Screen post-processing effects are a common way to implement full-screen visual effects in games.

Building a basic screen post-processing script system

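Once the scene has been rendered into a screen image, a series of operations is applied to that image. The rendered screen can be captured with the OnRenderImage function: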

MonoBehaviour.OnRenderImage(RenderTexture src,RenderTexture dest)

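The rendered image is stored in the first parameter (src). After processing, the result is written to the target render texture (the second parameter, dest), which is then displayed on the screen. The processing itself is done with the Graphics.Blit function: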

public static void Blit(Texture src,RenderTexture dest,Material mat,int pass=-1)

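src is the source texture and dest is the target render texture; if dest is null, the result is drawn directly to the screen. mat is the material to use, and its shader performs the post-processing; src is passed to that shader as its _MainTex property. A pass value of -1 (the default) runs every pass in the shader in order; any other value runs only the pass with that index.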

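By default, OnRenderImage is called after all opaque and transparent passes have finished. To keep an effect from affecting transparent objects, add the ImageEffectOpaque attribute to the function; it is then called right after the opaque passes, before transparent objects are rendered.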

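The post-processing workflow: attach a script to the camera that implements OnRenderImage and calls Graphics.Blit with a specific shader, then display the resulting render texture on the screen. Complex effects may need several Graphics.Blit calls, each one processing the output of the previous step.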

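Before applying a post-processing effect, check that the platform supports render textures and image effects and that the required shader is supported. A base class can perform these checks; each concrete effect then inherits from it and implements its own processing: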

using UnityEngine;

using System.Collections;

[ExecuteInEditMode]

[RequireComponent (typeof(Camera))]// Must be attached to a camera

public class PostEffectsBase : MonoBehaviour {

// Called when start

protected void CheckResources() {

bool isSupported = CheckSupport();

if (isSupported == false) {

NotSupported();

}

}

// Called in CheckResources to check support on this platform

protected bool CheckSupport() {

if (SystemInfo.supportsImageEffects == false || SystemInfo.supportsRenderTextures == false) {

Debug.LogWarning("This platform does not support image effects or render textures.");

return false;

}

return true;

}

// Called when the platform doesn't support this effect

protected void NotSupported() {

enabled = false;

}

protected void Start() {

CheckResources();

}

// Specify the shader used for processing

// Called when need to create the material used by this effect

protected Material CheckShaderAndCreateMaterial(Shader shader, Material material) {

if (shader == null) {

return null;

}

if (shader.isSupported && material && material.shader == shader){

return material;

}

if (!shader.isSupported) {

return null;

}

else {

material = new Material(shader);

material.hideFlags = HideFlags.DontSave;

if (material)

return material;

else

return null;

}

}

}

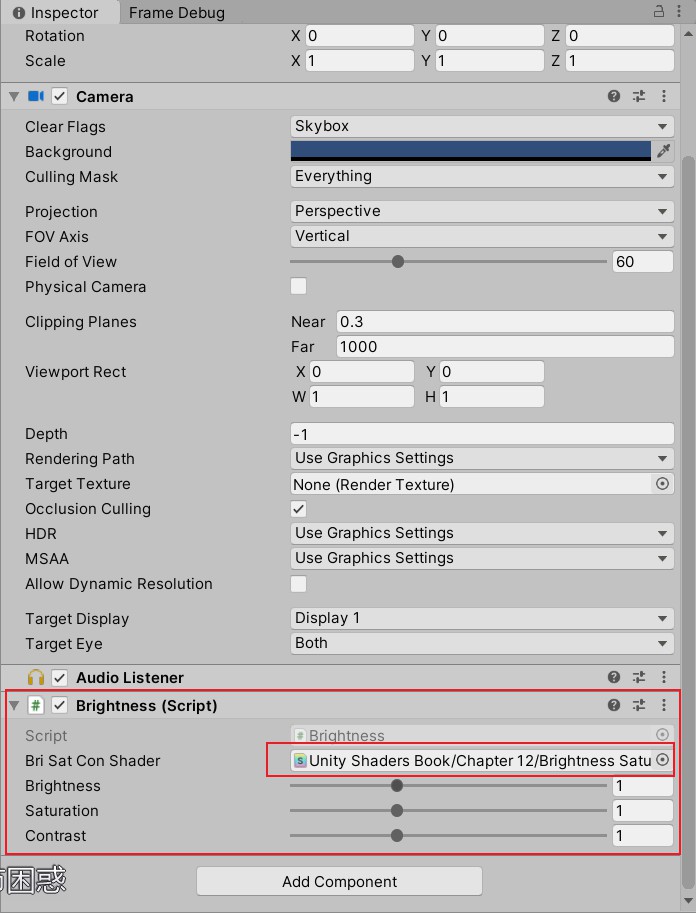

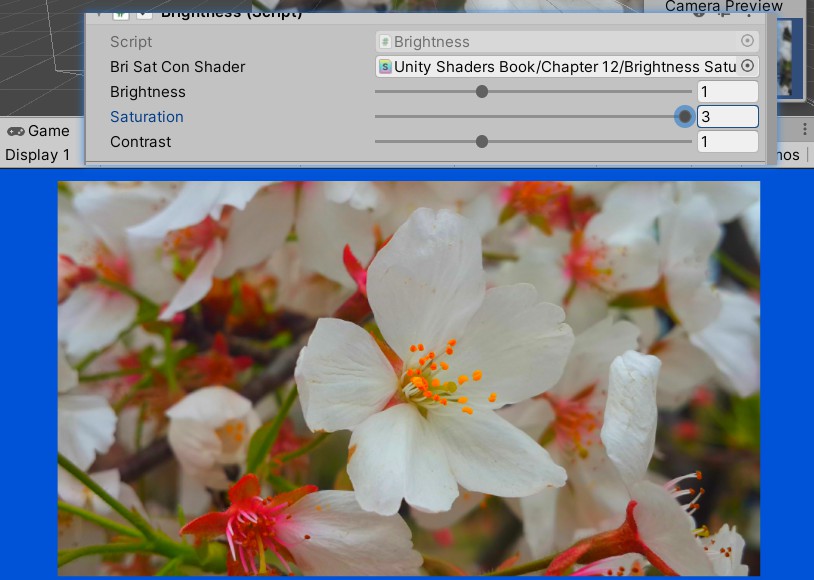

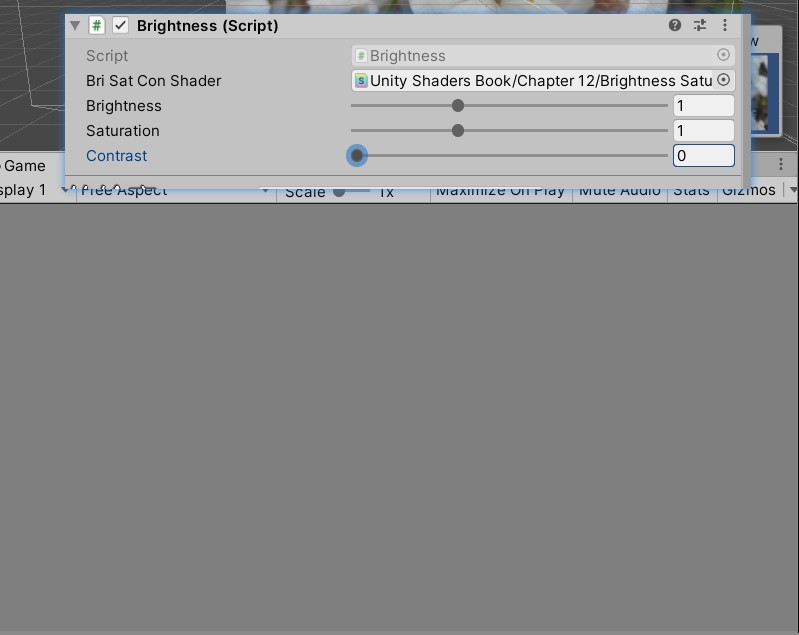

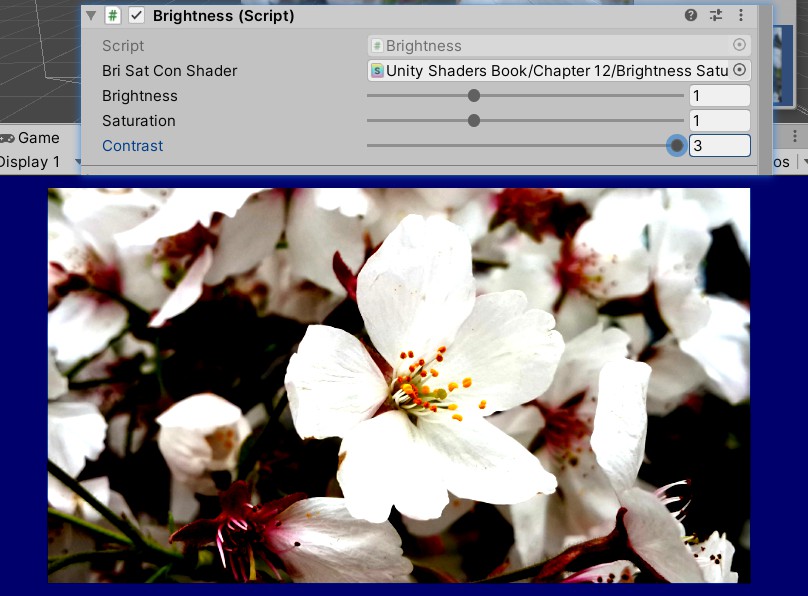

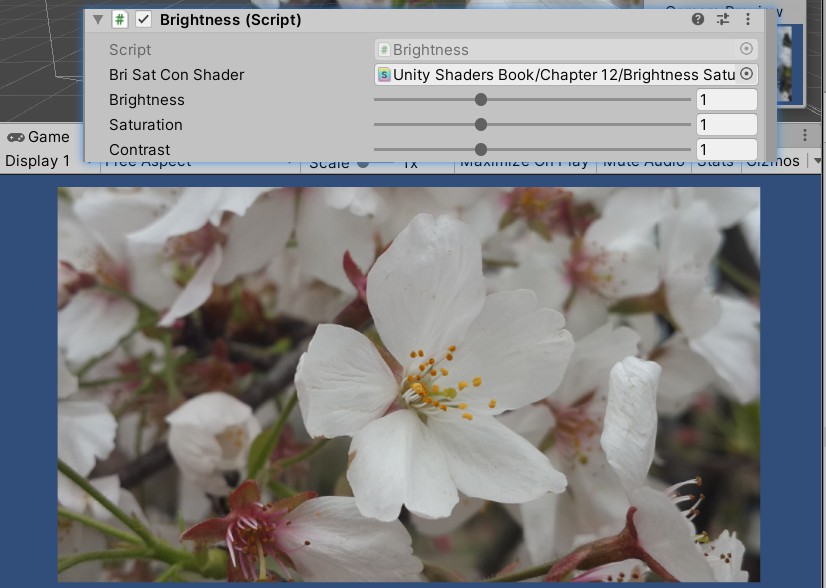

Adjusting screen brightness, saturation, and contrast

Pick a texture (set its texture type to Sprite), drag it into the scene, and position it.

Create a new script for the camera:

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

// Inherits from the base class defined earlier

public class Brightness : PostEffectsBase

{

public Shader briSatConShader;// The shader to use; the material below is created from it

private Material briSatConMaterial;

public Material material

{

get

{

briSatConMaterial = CheckShaderAndCreateMaterial(briSatConShader, briSatConMaterial);

return briSatConMaterial;

}

}

// Parameters

[Range(0.0f, 3.0f)]// A suitable range for each parameter

public float brightness = 1.0f;

[Range(0.0f, 3.0f)]

public float saturation = 1.0f;

[Range(0.0f, 3.0f)]

public float contrast = 1.0f;

void OnRenderImage(RenderTexture src, RenderTexture dest)

{

if (material != null)

{

// Pass the parameters to the material

material.SetFloat("_Brightness", brightness);

material.SetFloat("_Saturation", saturation);

material.SetFloat("_Contrast", contrast);

Graphics.Blit(src, dest, material);

}

else

{

Graphics.Blit(src, dest);

}

}

}

Create a new shader:

Shader "Unity Shaders Book/Chapter 12/Brightness Saturation And Contrast" {

Properties {

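// This block can be omitted: properties only expose values in the material inspector, and post-processing materials are created temporarily from script.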

_MainTex ("Base (RGB)", 2D) = "white" {}

_Brightness ("Brightness", Float) = 1

_Saturation("Saturation", Float) = 1

_Contrast("Contrast", Float) = 1

}

SubShader {

Pass {

ZTest Always Cull Off ZWrite Off// The effect is drawn as a full-screen quad; turn these off so it does not affect other objects

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "UnityCG.cginc"

sampler2D _MainTex; // Required: receives the texture to be processed

half _Brightness;// These values are set from the script

half _Saturation;

half _Contrast;

struct v2f {

float4 pos : SV_POSITION;

half2 uv: TEXCOORD0;

};

v2f vert(appdata_img v) {

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

o.uv = v.texcoord;

return o;

}

fixed4 frag(v2f i) : SV_Target {

fixed4 renderTex = tex2D(_MainTex, i.uv);

// Apply brightness

fixed3 finalColor = renderTex.rgb * _Brightness;

// Apply saturation

fixed luminance = 0.2125 * renderTex.r + 0.7154 * renderTex.g + 0.0721 * renderTex.b;// Luminance of the original pixel

fixed3 luminanceColor = fixed3(luminance, luminance, luminance);// Color with zero saturation

finalColor = lerp(luminanceColor, finalColor, _Saturation);// Interpolate to the desired saturation

// Apply contrast

fixed3 avgColor = fixed3(0.5, 0.5, 0.5);// Color with zero contrast

finalColor = lerp(avgColor, finalColor, _Contrast);// Interpolate to the desired contrast

return fixed4(finalColor, renderTex.a);

}

ENDCG

}

}

Fallback Off

}
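The arithmetic in the fragment shader above can be checked on the CPU. The following is a minimal Python sketch of the same three steps for a single pixel; it is not Unity code, and `lerp` and `adjust_pixel` are hypothetical helper names introduced only for illustration. No clamping is applied here.

```python
def lerp(a, b, t):
    """Linear interpolation, same formula as the shader's lerp(a, b, t)."""
    return a + (b - a) * t


def adjust_pixel(rgb, brightness=1.0, saturation=1.0, contrast=1.0):
    """Apply brightness, then saturation, then contrast to one RGB pixel."""
    r, g, b = rgb
    # Brightness: scale the color.
    color = [r * brightness, g * brightness, b * brightness]
    # Saturation: blend between the gray of equal luminance and the color.
    # As in the shader, luminance is computed from the original pixel.
    lum = 0.2125 * r + 0.7154 * g + 0.0721 * b
    color = [lerp(lum, c, saturation) for c in color]
    # Contrast: blend between mid-gray (0.5) and the color.
    color = [lerp(0.5, c, contrast) for c in color]
    return color


pixel = (0.2, 0.4, 0.6)
unchanged = adjust_pixel(pixel)                 # all parameters at 1
gray = adjust_pixel(pixel, saturation=0.0)      # all channels equal luminance
flat = adjust_pixel(pixel, contrast=0.0)        # every channel becomes 0.5
```

With all three parameters at 1 the pixel comes back unchanged, which is why 1.0 is the default for each slider in the script.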

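Then assign the shader to the script component on the camera (alternatively, set the shader as a default reference in the script's Inspector panel before dragging the script onto the camera):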

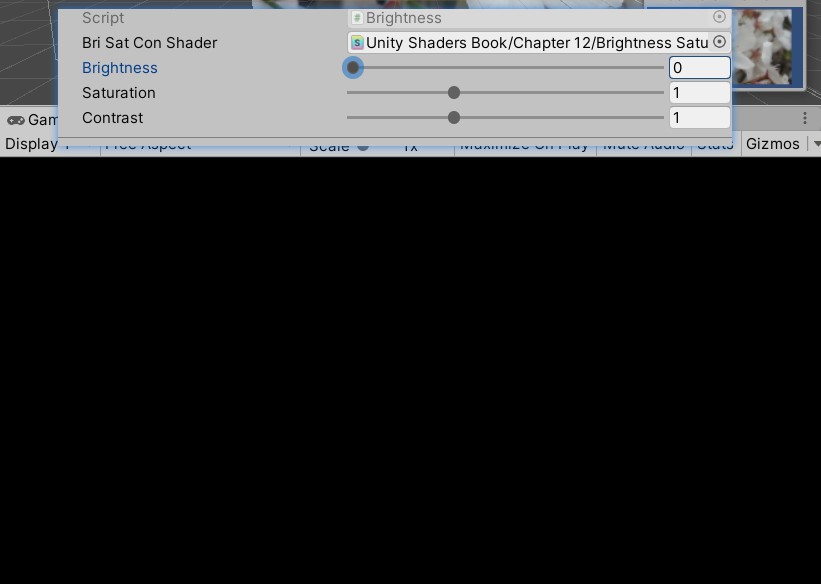

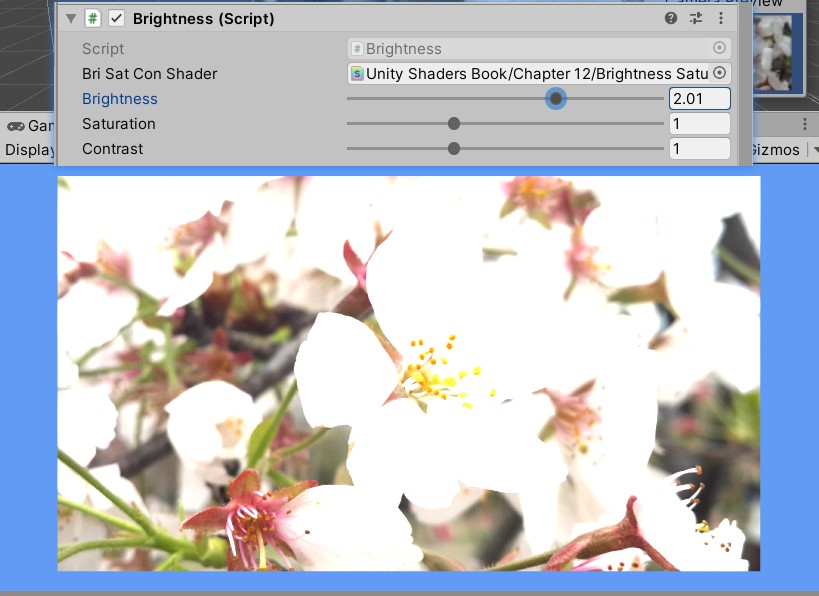

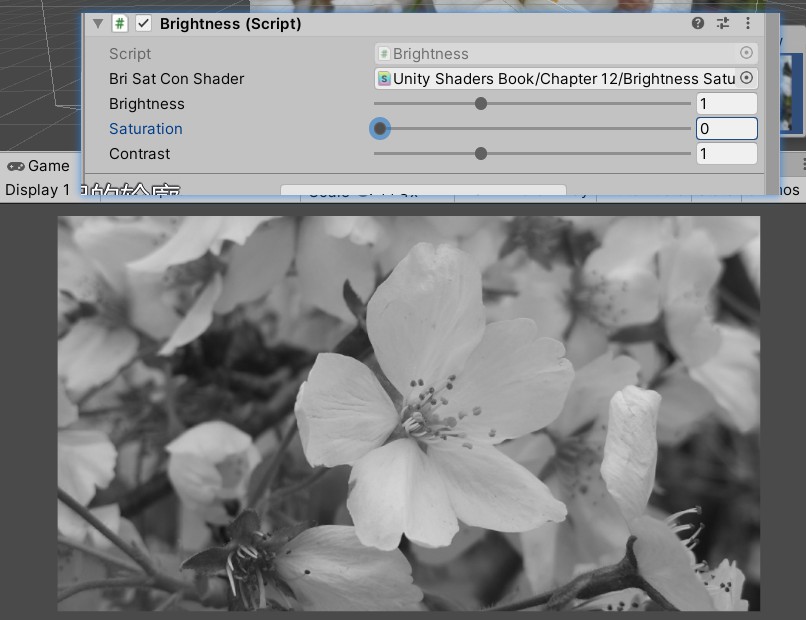

Result:

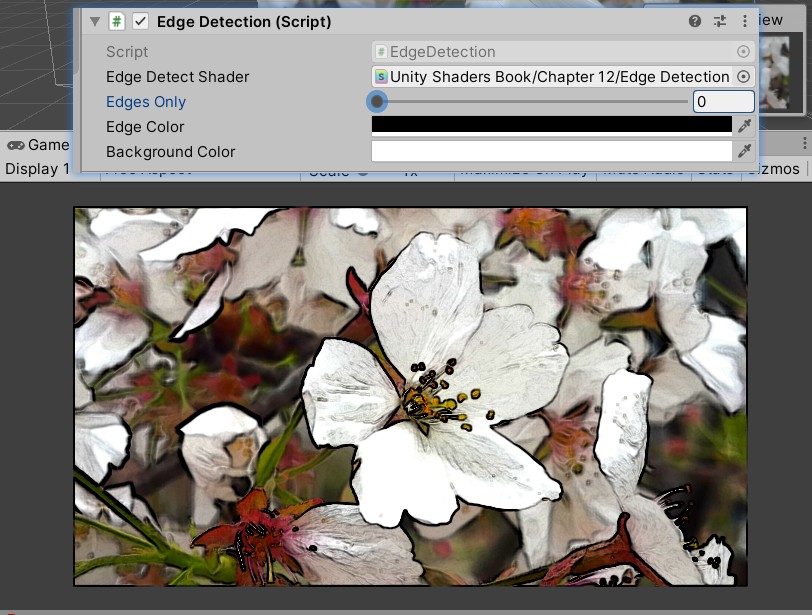

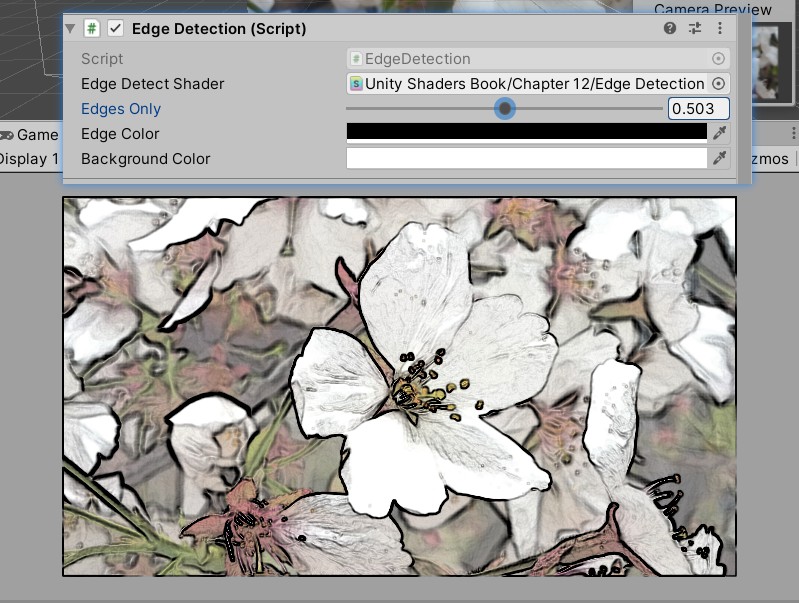

Edge detection

Edge detection is one way to implement an outline effect. It works by convolving the image with an edge detection operator.

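What is convolution? A convolution applies a kernel to every pixel of an image: the kernel is centered on the pixel, each kernel element is multiplied by the pixel it covers, and the products are summed to give the new pixel value.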

For example, a mean blur uses a 3×3 kernel in which every element is 1/9.
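As a rough illustration of the mean blur just described, here is a small pure-Python sketch that applies a 3×3 kernel to a grayscale image stored as a list of rows. The function name `convolve3x3` and the clamped border handling are assumptions made for this example. Like the shaders in this article, it does not flip the kernel, which makes no difference for symmetric kernels.

```python
def convolve3x3(image, kernel):
    """Apply a 3x3 kernel to a 2D list of floats; borders are clamped."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    # Clamp sample coordinates so edge pixels reuse the border.
                    sy = min(max(y + ky - 1, 0), h - 1)
                    sx = min(max(x + kx - 1, 0), w - 1)
                    acc += image[sy][sx] * kernel[ky][kx]
            out[y][x] = acc
    return out


# Mean-blur kernel: nine equal weights that sum to 1.
mean_kernel = [[1 / 9] * 3 for _ in range(3)]

# A single bright pixel in the middle of a dark 3x3 image.
image = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]

blurred = convolve3x3(image, mean_kernel)
```

The bright center value of 9 is spread evenly over its neighborhood, so every pixel of `blurred` ends up at about 1.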

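Common edge detection operators: where neighboring pixels differ markedly in color, luminance, or texture, there is likely an edge between them. The difference between neighboring pixels can be expressed as a gradient; the larger the absolute value of the gradient, the more likely the pixel lies on an edge.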

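Each operator consists of two kernels, one for detecting edge information in the horizontal direction and one for the vertical direction. During edge detection every pixel is convolved once with each kernel, giving the two gradient values Gx and Gy. The square of the overall gradient is the sum of their squares, G² = Gx² + Gy². To avoid the square root, the cheaper approximation G = |Gx| + |Gy| is used instead, and G decides whether the pixel lies on an edge.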
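The gradient computation can be sketched on the CPU as follows. This is an illustrative Python version using the same Sobel kernels as the shader further down; `sobel_edge` is a hypothetical name, the border clamping is an assumption, and the return value follows the shader's convention of 1 - (|Gx| + |Gy|), so smaller means "more likely an edge".

```python
# Sobel kernels, listed row by row in the same order as in the shader.
GX = [-1, 0, 1,
      -2, 0, 2,
      -1, 0, 1]
GY = [-1, -2, -1,
       0,  0,  0,
       1,  2,  1]


def sobel_edge(lum, x, y):
    """Return 1 - (|Gx| + |Gy|) for pixel (x, y) of a luminance image."""
    h, w = len(lum), len(lum[0])
    gx = gy = 0.0
    i = 0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            # Clamp at the borders so every pixel has nine samples.
            sy = min(max(y + dy, 0), h - 1)
            sx = min(max(x + dx, 0), w - 1)
            gx += lum[sy][sx] * GX[i]
            gy += lum[sy][sx] * GY[i]
            i += 1
    return 1.0 - abs(gx) - abs(gy)


flat = [[0.5] * 4 for _ in range(3)]                  # no edges anywhere
step = [[0.0, 0.0, 0.25, 0.25] for _ in range(3)]     # vertical edge in the middle

flat_edge = sobel_edge(flat, 1, 1)   # 1.0: gradients cancel out
step_edge = sobel_edge(step, 1, 1)   # 0.0: |Gx| = 1, |Gy| = 0
```

On the flat image both gradients are zero, while the vertical step produces a large horizontal gradient and no vertical one.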

Camera script:

using UnityEngine;

using System.Collections;

public class EdgeDetection : PostEffectsBase {

public Shader edgeDetectShader;

private Material edgeDetectMaterial = null;

public Material material {

get {

edgeDetectMaterial = CheckShaderAndCreateMaterial(edgeDetectShader, edgeDetectMaterial);

return edgeDetectMaterial;

}

}

[Range(0.0f, 1.0f)]

public float edgesOnly = 0.0f;// 0: edges are overlaid on the image; 1: only edges are shown

public Color edgeColor = Color.black;

public Color backgroundColor = Color.white;

void OnRenderImage (RenderTexture src, RenderTexture dest) {

if (material != null) {

material.SetFloat("_EdgeOnly", edgesOnly);

material.SetColor("_EdgeColor", edgeColor);

material.SetColor("_BackgroundColor", backgroundColor);

Graphics.Blit(src, dest, material);

} else {

Graphics.Blit(src, dest);

}

}

}

shader:

Shader "Unity Shaders Book/Chapter 12/Edge Detection" {

Properties {

_MainTex ("Base (RGB)", 2D) = "white" {}

_EdgeOnly ("Edge Only", Float) = 1.0

_EdgeColor ("Edge Color", Color) = (0, 0, 0, 1)

_BackgroundColor ("Background Color", Color) = (1, 1, 1, 1)

}

SubShader {

Pass {

ZTest Always Cull Off ZWrite Off

CGPROGRAM

#include "UnityCG.cginc"

#pragma vertex vert

#pragma fragment fragSobel

sampler2D _MainTex;

uniform half4 _MainTex_TexelSize;

fixed _EdgeOnly;

fixed4 _EdgeColor;

fixed4 _BackgroundColor;

struct v2f {

float4 pos : SV_POSITION;

half2 uv[9] : TEXCOORD0;

};

v2f vert(appdata_img v) {

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

half2 uv = v.texcoord;

// _MainTex_TexelSize holds the size of one texel; the nine UVs cover the 3x3 neighborhood row by row, starting at offset (-1, -1)

o.uv[0] = uv + _MainTex_TexelSize.xy * half2(-1, -1);

o.uv[1] = uv + _MainTex_TexelSize.xy * half2(0, -1);

o.uv[2] = uv + _MainTex_TexelSize.xy * half2(1, -1);

o.uv[3] = uv + _MainTex_TexelSize.xy * half2(-1, 0);

o.uv[4] = uv + _MainTex_TexelSize.xy * half2(0, 0);

o.uv[5] = uv + _MainTex_TexelSize.xy * half2(1, 0);

o.uv[6] = uv + _MainTex_TexelSize.xy * half2(-1, 1);

o.uv[7] = uv + _MainTex_TexelSize.xy * half2(0, 1);

o.uv[8] = uv + _MainTex_TexelSize.xy * half2(1, 1);

return o;

}

fixed luminance(fixed4 color) {

return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b; // Luminance

}

half Sobel(v2f i) {

const half Gx[9] = {-1, 0, 1,

-2, 0, 2,

-1, 0, 1};

const half Gy[9] = {-1, -2, -1,

0, 0, 0,

1, 2, 1};

half texColor;

half edgeX = 0;

half edgeY = 0;

for (int it = 0; it < 9; it++) {

texColor = luminance(tex2D(_MainTex, i.uv[it]));// Luminance of one pixel in the neighborhood

edgeX += texColor * Gx[it];// Multiply by the matching kernel element and accumulate into that direction's gradient

edgeY += texColor * Gy[it];

}

half edge = 1 - abs(edgeX) - abs(edgeY);

return edge;// The smaller edge is, the more likely this pixel lies on an edge

}

fixed4 fragSobel(v2f i) : SV_Target {

half edge = Sobel(i);

fixed4 withEdgeColor = lerp(_EdgeColor, tex2D(_MainTex, i.uv[4]), edge);

fixed4 onlyEdgeColor = lerp(_EdgeColor, _BackgroundColor, edge);

return lerp(withEdgeColor, onlyEdgeColor, _EdgeOnly);

}

ENDCG

}

}

FallBack Off

}

Result:

For more accurate edge information, edge detection is often run on the screen's depth and normal textures instead.

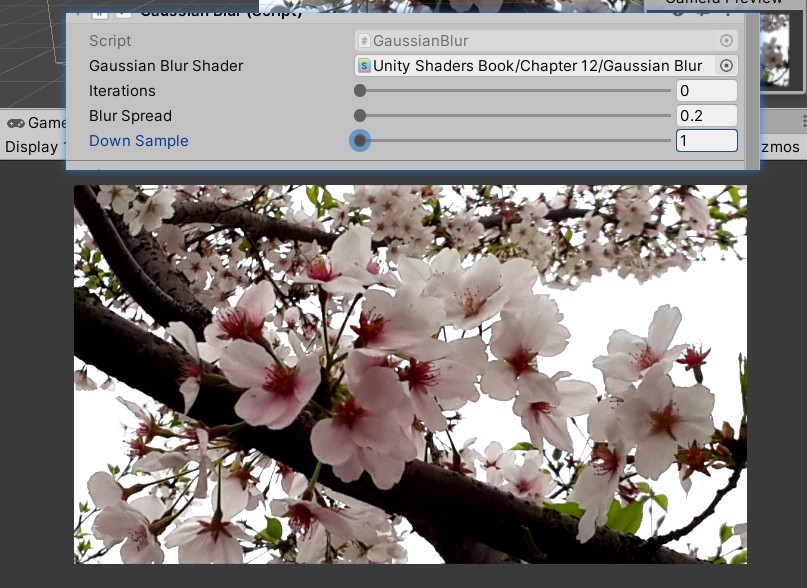

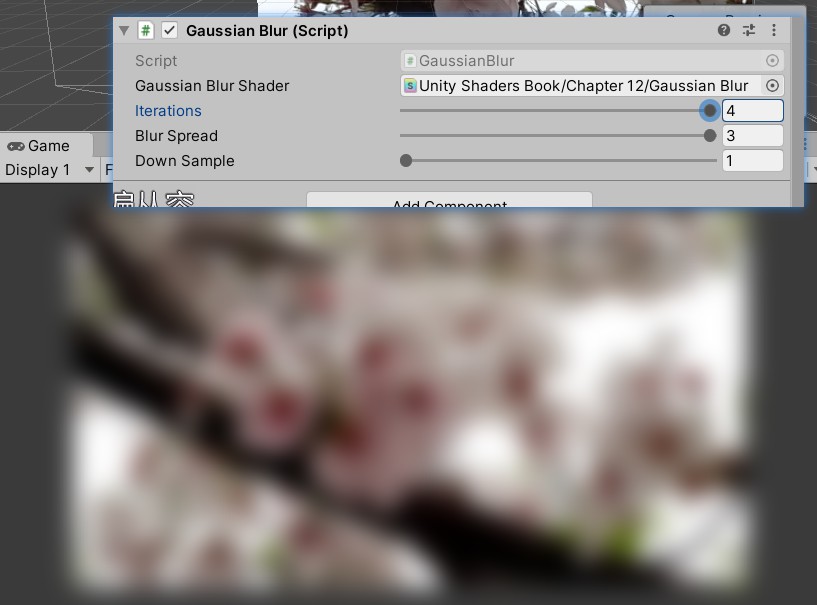

Gaussian blur

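Blurring is another common application of convolution.

Common blurs:

Mean blur: all kernel elements are equal and sum to 1, so each output pixel is the average of the pixels in its neighborhood.

Median blur: the pixels in the neighborhood are sorted and the median replaces the original color.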
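The difference between the two blurs is easiest to see on a single outlier pixel. Below is a minimal Python sketch; the helper names `neighborhood`, `mean_at` and `median_at` are made up for this example, and the 3×3 window with clamped borders is an assumption.

```python
from statistics import median


def neighborhood(image, x, y):
    """Collect the 3x3 neighborhood around (x, y), clamping at the borders."""
    h, w = len(image), len(image[0])
    return [image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def mean_at(image, x, y):
    """Mean blur: average of the neighborhood."""
    values = neighborhood(image, x, y)
    return sum(values) / len(values)


def median_at(image, x, y):
    """Median blur: middle value of the sorted neighborhood."""
    return median(neighborhood(image, x, y))


# One outlier (10) surrounded by ones.
image = [[1, 1, 1],
         [1, 10, 1],
         [1, 1, 1]]

mean_value = mean_at(image, 1, 1)      # 18 / 9 = 2.0, the outlier leaks in
median_value = median_at(image, 1, 1)  # 1, the outlier is discarded
```

The mean is pulled toward the outlier, whereas the median ignores it entirely, which is why median filters are often used against isolated noise.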

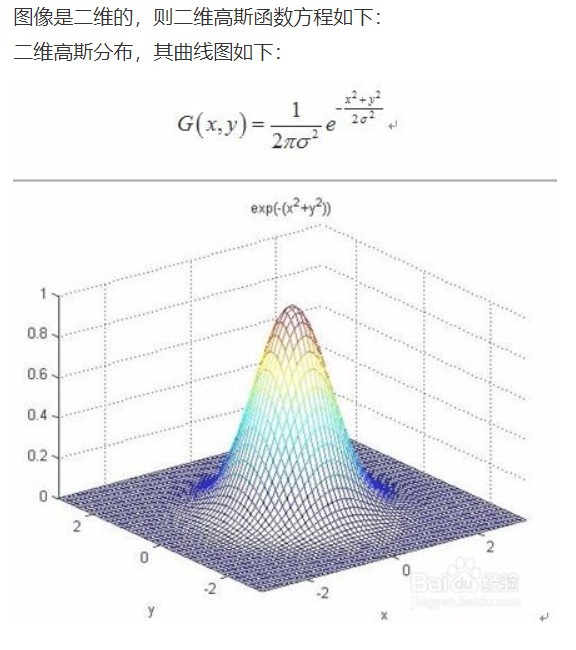

Gaussian blur uses a Gaussian kernel as the filter kernel. Each element is computed from the Gaussian equation:
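For reference, the standard two-dimensional Gaussian function is given here, written with the same symbols that the following paragraph describes. This is the textbook form and is assumed to be the equation intended at this point:

```latex
G(x, y) = \frac{1}{2\pi\sigma^{2}} \, e^{-\frac{x^{2} + y^{2}}{2\sigma^{2}}}
```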

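σ is the standard deviation (usually 1), and x and y are the integer distances from the current position to the center of the kernel. To keep the filtered image from becoming darker, the weights in the kernel are normalized so that they sum to 1. The Gaussian equation models well how much each neighboring pixel influences the pixel being processed: the closer the neighbor, the larger its weight. The larger the kernel, the stronger the blur.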

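The two-dimensional Gaussian function can be split into two one-dimensional functions, so the image can be filtered with two one-dimensional Gaussian kernels in succession. For an N×N kernel this reduces the samples per pixel from N×N to 2N. The one-dimensional kernel is also symmetric, so many weights repeat: a kernel of size 5 only needs three stored weights.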
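The three weights used later in the blur shader (0.4026, 0.2442, 0.0545) can be reproduced with a few lines of Python. This is a sketch under the assumptions σ = 1 and a kernel of size 5; `gaussian_weights_1d` is a hypothetical helper name. The constant factor of the Gaussian is dropped because normalization cancels it.

```python
import math


def gaussian_weights_1d(radius=2, sigma=1.0):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    raw = [math.exp(-(x * x) / (2 * sigma * sigma))
           for x in range(-radius, radius + 1)]
    total = sum(raw)
    # Normalize so the weights sum to 1 and the image does not darken.
    return [w / total for w in raw]


weights = gaussian_weights_1d()
rounded = [round(w, 4) for w in weights]
# rounded == [0.0545, 0.2442, 0.4026, 0.2442, 0.0545]

# Separability: the outer product of the 1D weights is the normalized
# 5x5 kernel, so two 1D passes are equivalent to one 2D pass.
kernel_2d = [[wy * wx for wx in weights] for wy in weights]
kernel_sum = sum(sum(row) for row in kernel_2d)
```

Because the list is symmetric around its center, only `weights[2]`, `weights[1]` and `weights[0]` need to be stored, which matches the three-element `weight` array in the shader.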

Camera script:

using UnityEngine;

using System.Collections;

public class GaussianBlur : PostEffectsBase {

public Shader gaussianBlurShader;

private Material gaussianBlurMaterial = null;

public Material material {

get {

gaussianBlurMaterial = CheckShaderAndCreateMaterial(gaussianBlurShader, gaussianBlurMaterial);

return gaussianBlurMaterial;

}

}

// Blur iterations - larger number means more blur.

[Range(0, 4)]

public int iterations = 3;

// Blur spread for each iteration - larger value means more blur

[Range(0.2f, 3.0f)]

public float blurSpread = 0.6f;

[Range(1, 8)]

public int downSample = 2;// Larger values mean fewer pixels to process; too large makes the image look pixelated

/// 1st edition: just apply blur

// void OnRenderImage(RenderTexture src, RenderTexture dest) {

// if (material != null) {

// int rtW = src.width;

// int rtH = src.height;

// RenderTexture buffer = RenderTexture.GetTemporary(rtW, rtH, 0);

// // Render the vertical pass

// Graphics.Blit(src, buffer, material, 0);

// // Render the horizontal pass

// Graphics.Blit(buffer, dest, material, 1);

// RenderTexture.ReleaseTemporary(buffer);

// } else {

// Graphics.Blit(src, dest);

// }

// }

/// 2nd edition: scale the render texture

// void OnRenderImage (RenderTexture src, RenderTexture dest) {

// if (material != null) {

// int rtW = src.width/downSample;

// int rtH = src.height/downSample;

// RenderTexture buffer = RenderTexture.GetTemporary(rtW, rtH, 0);

// buffer.filterMode = FilterMode.Bilinear;

//

// // Render the vertical pass

// Graphics.Blit(src, buffer, material, 0);

// // Render the horizontal pass

// Graphics.Blit(buffer, dest, material, 1);

//

// RenderTexture.ReleaseTemporary(buffer);

// } else {

// Graphics.Blit(src, dest);

// }

// }

/// 3rd edition: use iterations for larger blur

void OnRenderImage (RenderTexture src, RenderTexture dest) {

if (material != null) {

// Downsample the image by scaling to reduce the number of pixels to process

int rtW = src.width/downSample;

int rtH = src.height/downSample;

// Gaussian blur needs two passes, so an intermediate buffer holds the result of the first pass

RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0);// Allocate a buffer of the screen size divided by downSample

buffer0.filterMode = FilterMode.Bilinear;// Use bilinear filtering

Graphics.Blit(src, buffer0);

for (int i = 0; i < iterations; i++) {// Each blur iteration alternates between two temporary buffers

material.SetFloat("_BlurSize", 1.0f + i * blurSpread);

RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render the vertical pass

Graphics.Blit(buffer0, buffer1, material, 0);

RenderTexture.ReleaseTemporary(buffer0);// Release the buffer allocated earlier

buffer0 = buffer1;

buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render the horizontal pass

Graphics.Blit(buffer0, buffer1, material, 1);

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

}

Graphics.Blit(buffer0, dest);

RenderTexture.ReleaseTemporary(buffer0);

} else {

Graphics.Blit(src, dest);

}

}

}

shader:

Shader "Unity Shaders Book/Chapter 12/Gaussian Blur" {

Properties {

_MainTex ("Base (RGB)", 2D) = "white" {}

_BlurSize ("Blur Size", Float) = 1.0

}

SubShader {

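CGINCLUDE// Code in this block is shared by all passes; each pass only names the vertex and fragment functions it uses, which avoids writing the same fragment shader twice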

#include "UnityCG.cginc"

sampler2D _MainTex;

half4 _MainTex_TexelSize;

float _BlurSize;// Larger values blur more, but too large causes ghosting

struct v2f {

float4 pos : SV_POSITION;

half2 uv[5]: TEXCOORD0;

};

v2f vertBlurVertical(appdata_img v) {

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

half2 uv = v.texcoord;

o.uv[0] = uv;

o.uv[1] = uv + float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[2] = uv - float2(0.0, _MainTex_TexelSize.y * 1.0) * _BlurSize;

o.uv[3] = uv + float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

o.uv[4] = uv - float2(0.0, _MainTex_TexelSize.y * 2.0) * _BlurSize;

return o;

}

v2f vertBlurHorizontal(appdata_img v) {

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

half2 uv = v.texcoord;

o.uv[0] = uv;

o.uv[1] = uv + float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;

o.uv[2] = uv - float2(_MainTex_TexelSize.x * 1.0, 0.0) * _BlurSize;

o.uv[3] = uv + float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;

o.uv[4] = uv - float2(_MainTex_TexelSize.x * 2.0, 0.0) * _BlurSize;

return o;

}

fixed4 fragBlur(v2f i) : SV_Target {

float weight[3] = {0.4026, 0.2442, 0.0545};

fixed3 sum = tex2D(_MainTex, i.uv[0]).rgb * weight[0];// Center pixel times its weight

for (int it = 1; it < 3; it++) {

sum += tex2D(_MainTex, i.uv[it*2-1]).rgb * weight[it];// Sample at +it texels from the center

sum += tex2D(_MainTex, i.uv[it*2]).rgb * weight[it];// Sample at -it texels from the center

}

return fixed4(sum, 1.0);

}

ENDCG

ZTest Always Cull Off ZWrite Off

Pass {

NAME "GAUSSIAN_BLUR_VERTICAL"

CGPROGRAM

#pragma vertex vertBlurVertical

#pragma fragment fragBlur

ENDCG

}

Pass {

NAME "GAUSSIAN_BLUR_HORIZONTAL"

CGPROGRAM

#pragma vertex vertBlurHorizontal

#pragma fragment fragBlur

ENDCG

}

}

FallBack "Diffuse"

}

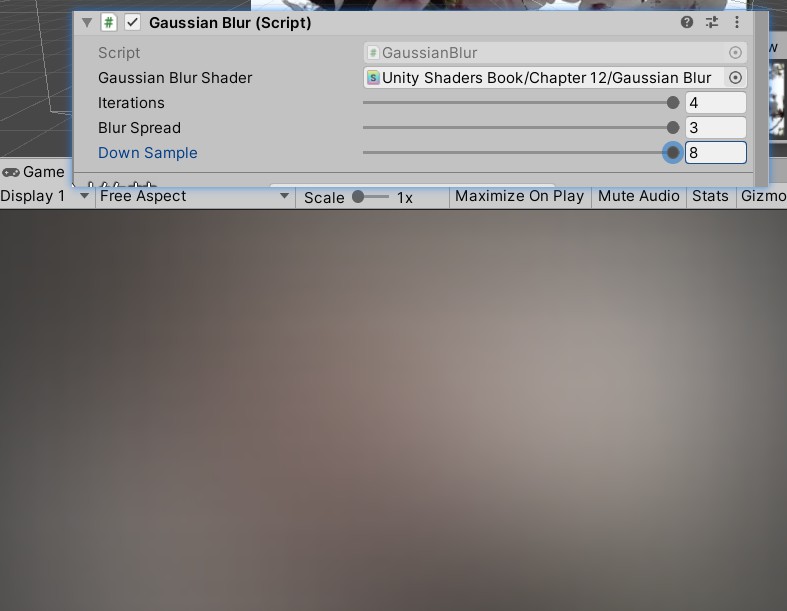

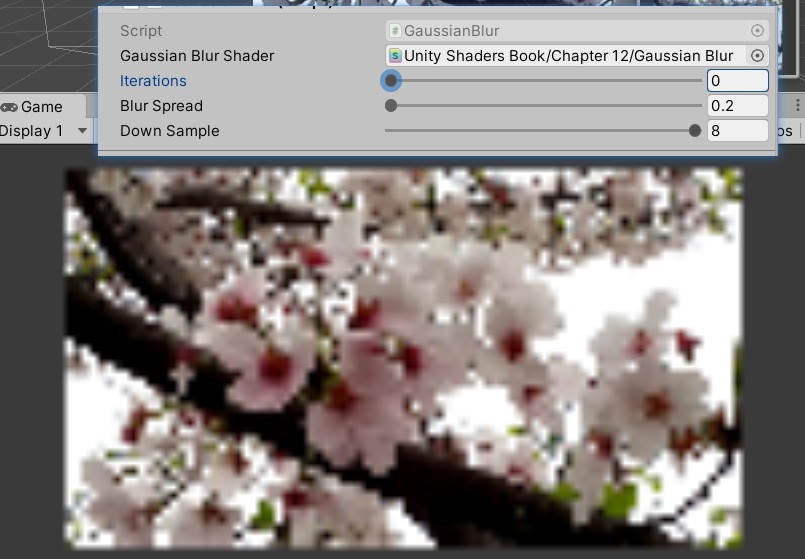

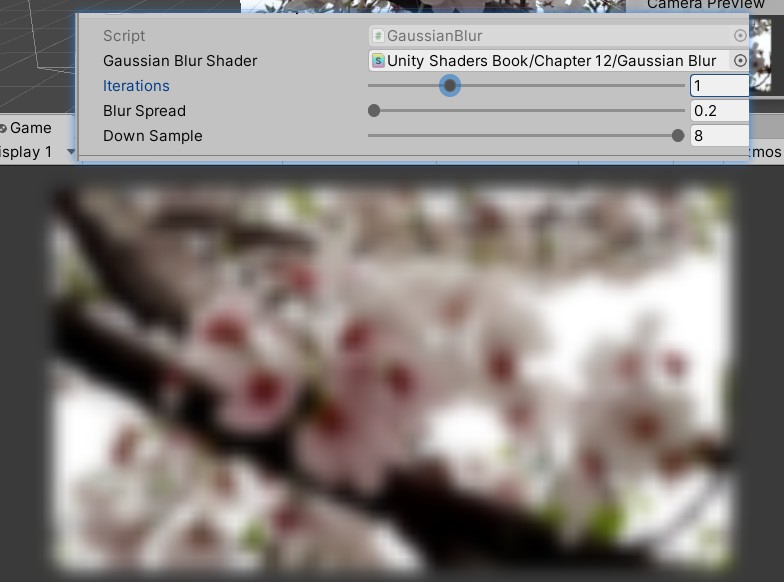

Result:

Giving a Pass a name lets other shaders reuse it by name through UsePass instead of duplicating the code.

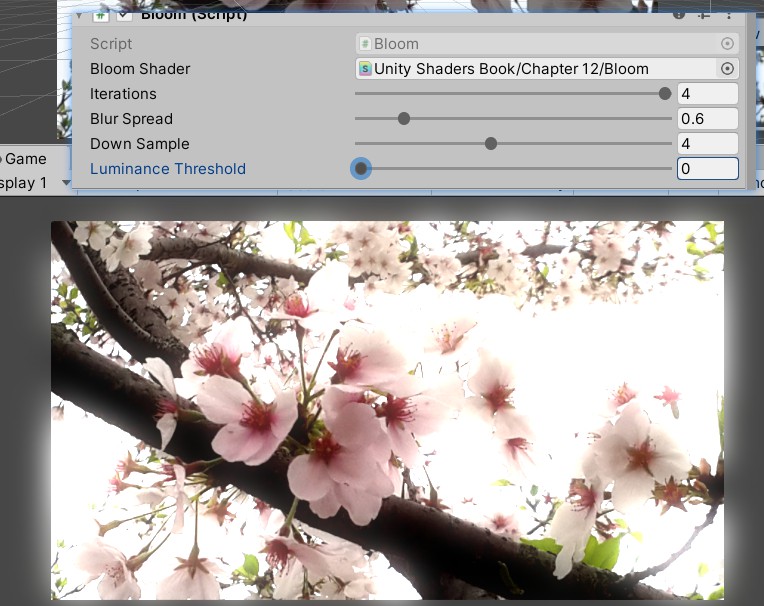

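Bloom effect

Bloom makes the brighter areas of the image "spread" into their surroundings, producing a hazy glow.

How it works: the brighter areas of the image are extracted according to a threshold and stored in a render texture. That render texture is then blurred with a Gaussian blur to simulate light spreading, and finally blended with the original image to give the final result.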
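The three stages can be sketched on a single row of RGB pixels. This Python version is only an illustration of the idea, not the Unity implementation: the helper names are hypothetical, the blur is a single horizontal 5-tap pass with clamped borders, and the final blend is a plain addition as in the shader's last pass.

```python
WEIGHTS = (0.0545, 0.2442, 0.4026, 0.2442, 0.0545)


def luminance(rgb):
    return 0.2125 * rgb[0] + 0.7154 * rgb[1] + 0.0721 * rgb[2]


def extract_bright(rgb, threshold):
    """Stage 1: keep only the part of the pixel above the threshold."""
    val = min(max(luminance(rgb) - threshold, 0.0), 1.0)
    return tuple(c * val for c in rgb)


def blur_row(row, weights=WEIGHTS):
    """Stage 2: one 5-tap Gaussian pass along the row (borders clamped)."""
    n = len(row)
    radius = len(weights) // 2
    out = []
    for i in range(n):
        acc = [0.0, 0.0, 0.0]
        for k, w in enumerate(weights):
            j = min(max(i + k - radius, 0), n - 1)
            for ch in range(3):
                acc[ch] += row[j][ch] * w
        out.append(tuple(acc))
    return out


def bloom_row(row, threshold=0.6):
    """Stage 3: add the blurred bright areas back onto the original row."""
    bright = [extract_bright(p, threshold) for p in row]
    blurred = blur_row(bright)
    return [tuple(a + b for a, b in zip(p, q)) for p, q in zip(row, blurred)]


dark = (0.1, 0.1, 0.1)
white = (1.0, 1.0, 1.0)
result = bloom_row([dark, dark, white, dark, dark])
all_dark = bloom_row([dark] * 5)
```

In `result` the dark pixels next to the white one become brighter because the extracted highlight has been spread onto them, while a row with no pixel above the threshold is returned unchanged.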

Camera script:

using UnityEngine;

using System.Collections;

public class Bloom : PostEffectsBase {

public Shader bloomShader;

private Material bloomMaterial = null;

public Material material {

get {

bloomMaterial = CheckShaderAndCreateMaterial(bloomShader, bloomMaterial);

return bloomMaterial;

}

}

// Blur iterations - larger number means more blur.

[Range(0, 4)]

public int iterations = 3;

// Blur spread for each iteration - larger value means more blur

[Range(0.2f, 3.0f)]

public float blurSpread = 0.6f;

[Range(1, 8)]

public int downSample = 2;

[Range(0.0f, 4.0f)]

public float luminanceThreshold = 0.6f;// Threshold for extracting bright areas. With HDR enabled, colors are stored in a higher-precision buffer and luminance can exceed 1, hence the range [0, 4]

void OnRenderImage (RenderTexture src, RenderTexture dest) {

if (material != null) {

material.SetFloat("_LuminanceThreshold", luminanceThreshold);

int rtW = src.width/downSample;

int rtH = src.height/downSample;

RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0);

buffer0.filterMode = FilterMode.Bilinear;

Graphics.Blit(src, buffer0, material, 0);// Use the first pass to extract the brighter parts of the image

for (int i = 0; i < iterations; i++) {// Gaussian blur iterations

material.SetFloat("_BlurSize", 1.0f + i * blurSpread);

RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render the vertical pass

Graphics.Blit(buffer0, buffer1, material, 1);// Use the second pass

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0);

// Render the horizontal pass

Graphics.Blit(buffer0, buffer1, material, 2);// Use the third pass

RenderTexture.ReleaseTemporary(buffer0);

buffer0 = buffer1;

}

material.SetTexture ("_Bloom", buffer0);

Graphics.Blit (src, dest, material, 3); // Use the fourth pass for the final blend

RenderTexture.ReleaseTemporary(buffer0);

} else {

Graphics.Blit(src, dest);

}

}

}

shader:

Shader "Unity Shaders Book/Chapter 12/Bloom" {

Properties {

_MainTex ("Base (RGB)", 2D) = "white" {}

_Bloom ("Bloom (RGB)", 2D) = "black" {}

_LuminanceThreshold ("Luminance Threshold", Float) = 0.5// Threshold for extracting bright areas

_BlurSize ("Blur Size", Float) = 1.0

}

SubShader {

CGINCLUDE

#include "UnityCG.cginc"

sampler2D _MainTex;// The input render texture

half4 _MainTex_TexelSize;

sampler2D _Bloom;// The bright areas after Gaussian blur

float _LuminanceThreshold;// Threshold

float _BlurSize;

struct v2f {

float4 pos : SV_POSITION;

half2 uv : TEXCOORD0;

};

v2f vertExtractBright(appdata_img v) {

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

o.uv = v.texcoord;

return o;

}

fixed luminance(fixed4 color) {

return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b; // Luminance

}

fixed4 fragExtractBright(v2f i) : SV_Target {

fixed4 c = tex2D(_MainTex, i.uv);

fixed val = clamp(luminance(c) - _LuminanceThreshold, 0.0, 1.0);// Subtract the threshold and clamp the result to [0, 1]

return c * val;// The extracted bright areas

}

struct v2fBloom {

float4 pos : SV_POSITION;

half4 uv : TEXCOORD0;

};

v2fBloom vertBloom(appdata_img v) {

v2fBloom o;

o.pos = UnityObjectToClipPos (v.vertex);

o.uv.xy = v.texcoord;// Texture coordinates of the original image

o.uv.zw = v.texcoord;// Texture coordinates of the blurred bright areas

#if UNITY_UV_STARTS_AT_TOP

if (_MainTex_TexelSize.y < 0.0)// Handle platform differences

o.uv.w = 1.0 - o.uv.w;

#endif

return o;

}

fixed4 fragBloom(v2fBloom i) : SV_Target {

return tex2D(_MainTex, i.uv.xy) + tex2D(_Bloom, i.uv.zw);

}

ENDCG

ZTest Always Cull Off ZWrite Off

Pass {

CGPROGRAM

#pragma vertex vertExtractBright

#pragma fragment fragExtractBright

ENDCG

}

UsePass "Unity Shaders Book/Chapter 12/Gaussian Blur/GAUSSIAN_BLUR_VERTICAL"// Pass names are always stored in upper case

UsePass "Unity Shaders Book/Chapter 12/Gaussian Blur/GAUSSIAN_BLUR_HORIZONTAL"

Pass {

CGPROGRAM

#pragma vertex vertBloom

#pragma fragment fragBloom

ENDCG

}

}

FallBack Off

}

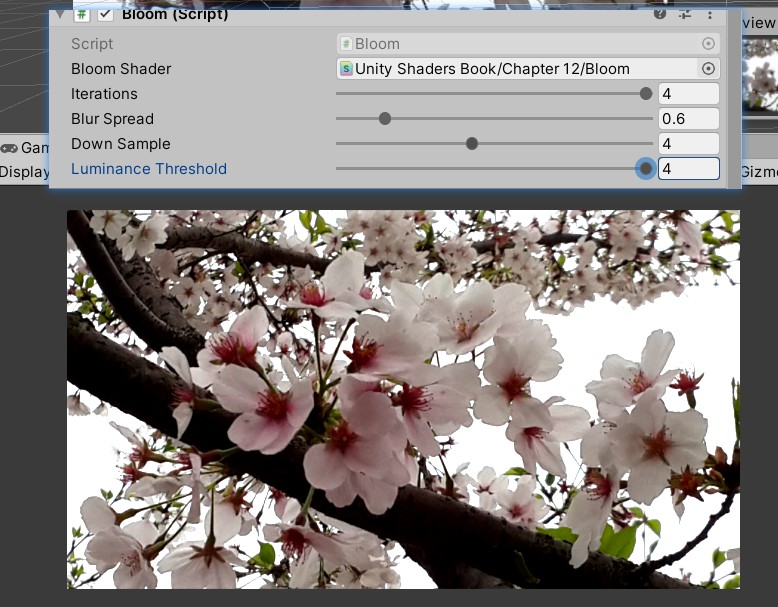

Result:

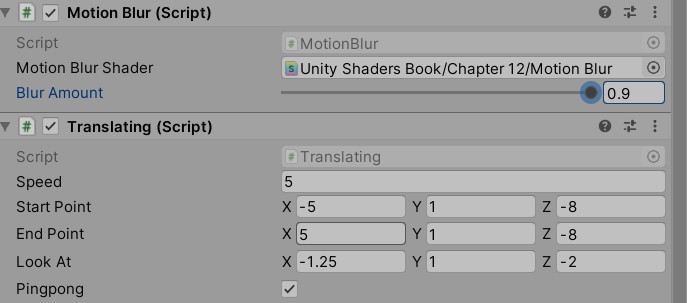

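Motion blur

Motion blur can be implemented in several ways. One uses an accumulation buffer to blend several consecutive images: when an object moves quickly, the scene is rendered several times within the same frame and the average of those images is used as the final motion-blurred image. This is very expensive.

Another widely used method creates a velocity buffer that stores the current velocity of each pixel, and uses that value to decide the direction and size of the blur.

This section uses a variant of the first method. Instead of rendering the scene several times per frame, it keeps the previous result and keeps blending the current frame into it, which produces a motion-trail effect. This performs better than a true accumulation buffer, at some cost in blur quality.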
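The accumulation step amounts to a weighted blend between the stored image and the current frame. The sketch below shows that blend in Python for a one-dimensional "image"; `accumulate` is a hypothetical name, and the weights mirror the script and shader that follow, where the current frame is blended with alpha = 1 - blurAmount.

```python
def accumulate(acc, current, blur_amount):
    """Blend the current frame into the accumulated image.

    Equivalent to alpha blending with alpha = 1 - blur_amount:
    result = alpha * current + (1 - alpha) * accumulated.
    """
    alpha = 1.0 - blur_amount
    return [alpha * c + (1.0 - alpha) * a for a, c in zip(acc, current)]


# A bright object is at pixel 0 in the first frame, then leaves the image.
frame0 = [1.0, 0.0, 0.0]
empty = [0.0, 0.0, 0.0]

acc = list(frame0)                    # initialized with the first frame
acc = accumulate(acc, empty, 0.5)     # trail fades to 0.5
after_one = list(acc)
acc = accumulate(acc, empty, 0.5)     # then to 0.25
after_two = list(acc)

no_blur = accumulate(frame0, empty, 0.0)  # blur_amount 0 keeps no history
```

Each new frame halves the remaining trail when `blur_amount` is 0.5; a larger value makes old frames fade more slowly, which is why the trail becomes more pronounced.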

Camera script:

using UnityEngine;

using System.Collections;

public class MotionBlur : PostEffectsBase {

public Shader motionBlurShader;

private Material motionBlurMaterial = null;

public Material material {

get {

motionBlurMaterial = CheckShaderAndCreateMaterial(motionBlurShader, motionBlurMaterial);

return motionBlurMaterial;

}

}

[Range(0.0f, 0.9f)]

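public float blurAmount = 0.5f;// Larger values give a more pronounced trail

private RenderTexture accumulationTexture;// Holds the result of blending the previous frames

void OnDisable() {// Destroy the texture so that accumulation restarts the next time the effect is enabled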

DestroyImmediate(accumulationTexture);

}

void OnRenderImage (RenderTexture src, RenderTexture dest) {

if (material != null) {

// Create the accumulation texture

if (accumulationTexture == null || accumulationTexture.width != src.width || accumulationTexture.height != src.height) {

DestroyImmediate(accumulationTexture);

accumulationTexture = new RenderTexture(src.width, src.height, 0);

accumulationTexture.hideFlags = HideFlags.HideAndDontSave;// Not shown in the Hierarchy and not saved with the scene

Graphics.Blit(src, accumulationTexture);// Initialize it with the current frame

}

// We are accumulating motion over frames without clear/discard

// by design, so silence any performance warnings from Unity

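accumulationTexture.MarkRestoreExpected();// Tell Unity that a render texture restore operation is expected

// A restore happens when rendering into a texture that has not been cleared or discarded beforehand.

material.SetFloat("_BlurAmount", 1.0f - blurAmount);

Graphics.Blit (src, accumulationTexture, material);// Blend the current frame into the accumulated image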

Graphics.Blit (accumulationTexture, dest);

} else {

Graphics.Blit(src, dest);

}

}

}

shader:

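The shader blends the images of consecutive frames to produce an image with a blurred trail. When the motion is too fast, individual frames can become visible as separate images.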

Shader "Unity Shaders Book/Chapter 12/Motion Blur" {

Properties {

_MainTex ("Base (RGB)", 2D) = "white" {}

_BlurAmount ("Blur Amount", Float) = 1.0

}

SubShader {

CGINCLUDE

#include "UnityCG.cginc"

sampler2D _MainTex;

fixed _BlurAmount;

struct v2f {

float4 pos : SV_POSITION;

half2 uv : TEXCOORD0;

};

v2f vert(appdata_img v) {

v2f o;

o.pos = UnityObjectToClipPos(v.vertex);

o.uv = v.texcoord;

return o;

}

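// Updates the RGB channels of the render texture

fixed4 fragRGB (v2f i) : SV_Target {

return fixed4(tex2D(_MainTex, i.uv).rgb, _BlurAmount);// Sample the current image and set alpha to _BlurAmount for the blend

}

// Updates the alpha channel of the render texture

half4 fragA (v2f i) : SV_Target {

return tex2D(_MainTex, i.uv);// Write the sampled alpha directly so the blend does not affect it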

}

ENDCG

ZTest Always Cull Off ZWrite Off

Pass {

Blend SrcAlpha OneMinusSrcAlpha// Blend the RGB channels using alpha

ColorMask RGB

CGPROGRAM

#pragma vertex vert

#pragma fragment fragRGB

ENDCG

}

Pass {

Blend One Zero

ColorMask A

CGPROGRAM

#pragma vertex vert

#pragma fragment fragA

ENDCG

}

}

FallBack Off

}

Result:

A separate script moves the camera so that the effect is visible:

using UnityEngine;

using System.Collections;

public class Translating : MonoBehaviour {

public float speed = 10.0f;

public Vector3 startPoint = Vector3.zero;

public Vector3 endPoint = Vector3.zero;

public Vector3 lookAt = Vector3.zero;

public bool pingpong = true;

private Vector3 curEndPoint = Vector3.zero;

// Use this for initialization

void Start () {

transform.position = startPoint;

curEndPoint = endPoint;

}

// Update is called once per frame

void Update () {

transform.position = Vector3.Slerp(transform.position, curEndPoint, Time.deltaTime * speed);

transform.LookAt(lookAt);

if (pingpong) {

if (Vector3.Distance(transform.position, curEndPoint) < 0.001f) {

curEndPoint = Vector3.Distance(curEndPoint, endPoint) < Vector3.Distance(curEndPoint, startPoint) ? startPoint : endPoint;

}

}

}

}